I’ve been playing with the database snapshot feature on one of my lab SQL 2016 servers, when suddenly the snapshot operations started to throw me some weird errors:

Msg 5901, Level 16, State 1, Line 1
One or more recovery units belonging to database 'ci_dbUp' failed to generate a checkpoint. This is typically caused by lack of system resources such as disk or memory, or in some cases due to database corruption. Examine previous entries in the error log for more detailed information on this failure.
Msg 1823, Level 16, State 2, Line 1
A database snapshot cannot be created because it failed to start.
Msg 211, Level 23, State 8, Line 1
Corruption in database ID 8, object ID 41 possibly due to schema or catalog inconsistency. Run DBCC CHECKCATALOG.

Well, hello there. To be honest, the server I was playing on is one of those machines that could easily lose a battle to a modern calculator: an old “potato” server with SCSI drives, a decent number of cores, and practically non-existent disk performance. That is probably what triggered the corruption, because I was repeatedly running a create snapshot -> restore from snapshot -> remove snapshot chain while a restore operation was running in parallel. Each snapshot operation, which is usually instantaneous, was taking about 30 seconds.
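For reference, one iteration of that cycle looks roughly like the sketch below. The snapshot name and the sparse file path are hypothetical (my actual scripts are not shown here); the logical file name ci_dbUp is the one used by the database in this post.

```sql
USE master;

-- Create a snapshot of the source database.
-- Snapshot name and .ss file path are made up for illustration.
CREATE DATABASE ci_dbUp_snap
ON ( NAME = N'ci_dbUp', FILENAME = N'I:\MSSQL13.MSSQLSERVER\ci_dbUp_snap.ss' )
AS SNAPSHOT OF ci_dbUp;

-- Revert the source database to the snapshot.
-- This only works when it is the sole snapshot of that database.
RESTORE DATABASE ci_dbUp FROM DATABASE_SNAPSHOT = 'ci_dbUp_snap';

-- Remove the snapshot once it is no longer needed.
DROP DATABASE ci_dbUp_snap;
```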

One of them apparently was fatal for the database. So, what’s the plan, Doc?

Diagnostics

Since it’s a pretty small database, I can go with full-blown DBCC CHECKDB with EXTENDED_LOGICAL_CHECKS (you can consider running lighter checks first though, see https://docs.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-checkdb-transact-sql):

DBCC CHECKDB ('ci_dbUp') WITH NO_INFOMSGS, ALL_ERRORMSGS, EXTENDED_LOGICAL_CHECKS

Msg 5901, Level 16, State 1, Line 1
One or more recovery units belonging to database 'ci_dbUp' failed to generate a checkpoint. This is typically caused by lack of system resources such as disk or memory, or in some cases due to database corruption. Examine previous entries in the error log for more detailed information on this failure.
Msg 1823, Level 16, State 2, Line 1
A database snapshot cannot be created because it failed to start.
Msg 1823, Level 16, State 8, Line 1
A database snapshot cannot be created because it failed to start.
Msg 7928, Level 16, State 1, Line 1
The database snapshot for online checks could not be created. Either the reason is given in a previous error or one of the underlying volumes does not support sparse files or alternate streams. Attempting to get exclusive access to run checks offline.
Msg 211, Level 23, State 8, Line 1
Corruption in database ID 8, object ID 41 possibly due to schema or catalog inconsistency. Run DBCC CHECKCATALOG.

DBCC CHECKCATALOG gives pretty much the same output.
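For completeness, the lighter checks mentioned above could look like the sketch below. It is only an illustration of the options; since these commands also rely on an internal snapshot when run online, they would most likely have failed in the same way on this database.

```sql
-- Physical-structure check only: faster, skips most of the logical checks.
DBCC CHECKDB ('ci_dbUp') WITH PHYSICAL_ONLY, NO_INFOMSGS;

-- Catalog consistency check, as suggested by Msg 211.
DBCC CHECKCATALOG ('ci_dbUp') WITH NO_INFOMSGS;
```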

SQL Server logs: same error number, memory dumps, no specifics.
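If you prefer to look at the logs and dumps from a query window, something along these lines should work. This is a sketch of one way to do it, not necessarily how I checked; the search string is simply the database name.

```sql
-- Search the current SQL Server error log (log 0, type 1) for the database name.
EXEC sys.sp_readerrorlog 0, 1, N'ci_dbUp';

-- List the memory dumps the instance has generated, newest first.
SELECT filename, creation_time, size_in_bytes
FROM sys.dm_server_memory_dumps
ORDER BY creation_time DESC;
```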

I could try a restore (or a partial restore), but the database is not in the full or bulk-logged recovery model, which a page restore would require. Also, the msdb.dbo.suspect_pages table shows zero suspect pages, so there is probably nothing to be done here except a full restore from the previous night’s backup.
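Both facts are easy to check with a couple of queries. A minimal sketch, assuming the database name from this post:

```sql
-- Which recovery model is the database in? Page restore needs FULL or BULK_LOGGED.
SELECT name, recovery_model_desc
FROM sys.databases
WHERE name = N'ci_dbUp';

-- Has SQL Server recorded any suspect pages for this database?
SELECT database_id, file_id, page_id, event_type, error_count, last_update_date
FROM msdb.dbo.suspect_pages
WHERE database_id = DB_ID(N'ci_dbUp');
```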

Backup source files

Before doing any kind of recovery, it’s always a good idea to back up your database files. A regular backup probably won’t do – you generally want a physical copy of the files – but we can still try one, just in case:

BACKUP DATABASE ci_dbUp TO DISK = 'd:\temp\ci_dbUp.bak'

Msg 5901, Level 16, State 1, Line 1
One or more recovery units belonging to database 'ci_dbUp' failed to generate a checkpoint. This is typically caused by lack of system resources such as disk or memory, or in some cases due to database corruption. Examine previous entries in the error log for more detailed information on this failure.
Msg 3013, Level 16, State 1, Line 1
BACKUP DATABASE is terminating abnormally.
Msg 211, Level 23, State 8, Line 1
Corruption in database ID 8, object ID 41 possibly due to schema or catalog inconsistency. Run DBCC CHECKCATALOG.

Hmm, okay. How about a physical file copy? It will, however, require putting the database into OFFLINE mode, which means it probably won’t come back online willingly: every database has to pass recovery and basic consistency checks before coming online, which obviously won’t happen this time. From here on, we’ll have to work with the database in emergency mode, which makes it read-only and restricts access to members of the sysadmin role.

ALTER DATABASE ci_dbUp SET OFFLINE WITH ROLLBACK IMMEDIATE

.. did not work!

Corruption in database ID 8, object ID 41 possibly due to schema or catalog inconsistency. Run DBCC CHECKCATALOG.

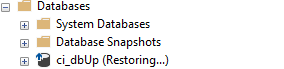

Alrighty then, let’s shut down the whole server and copy the database files while it’s offline. That, of course, worked, but now we have our database in a ‘failed to recover’ state:

Surprisingly, it did not perform any consistency checks and simply put the database in the ‘Restoring’ mode:

The database 'ci_dbUp' is marked RESTORING and is in a state that does not allow recovery to be run.
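The state the engine reports for the database can be confirmed from the catalog. A small sketch, assuming nothing beyond the database name used in this post:

```sql
-- Show the current state, access mode and read-only flag of the database.
SELECT name, state_desc, user_access_desc, is_read_only
FROM sys.databases
WHERE name = N'ci_dbUp';
```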

Hmm, apparently, the redo log failed to apply after the restart, so it makes perfect sense to rebuild the log file. Since I now have copies of the original files, I will work on the copies instead of the originals.

Attach the database without the log file

Let’s try to re-attach the files with ATTACH_REBUILD_LOG parameter:

CREATE DATABASE ci_dbUp2
ON ( name = 'ci_dbUp', filename = 'I:\MSSQL13.MSSQLSERVER\ci_dbUp2.mdf'),
   ( name = 'ci_dbUp_log', filename = 'J:\MSSQL13.MSSQLSERVER\ci_dbUp2.ldf')
FOR ATTACH_REBUILD_LOG

Command(s) completed successfully.

Would you look at that! Apparently, there was some kind of stale transaction that somehow kept an internal lock on a system table and couldn’t be killed by regular means. The only option was to discard that transaction by rebuilding the transaction log file, which effectively left me without the latest and greatest transactions, but that’s still a pretty good result!

Now that the database has proven to be recoverable without data loss inside the data file, we can try running some DBCC commands and see if they bring any better results. But at this point we have already recovered the database – all checks are returning no errors whatsoever; snapshots and backups are working as expected.
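The verification itself can be sketched as follows. The backup file name is hypothetical (it reuses the d:\temp folder from earlier), and the CHECKSUM and INIT options are my additions for illustration:

```sql
-- Full consistency check on the re-attached copy.
DBCC CHECKDB ('ci_dbUp2') WITH NO_INFOMSGS, ALL_ERRORMSGS;

-- Take a backup with page checksums verified, overwriting any previous file.
BACKUP DATABASE ci_dbUp2
TO DISK = N'd:\temp\ci_dbUp2.bak'
WITH CHECKSUM, INIT;

-- Confirm the backup file is readable and its checksums are valid.
RESTORE VERIFYONLY
FROM DISK = N'd:\temp\ci_dbUp2.bak'
WITH CHECKSUM;
```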

Running DBCC CHECKDB with repair options

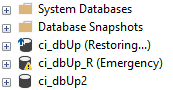

Since the original database is stuck in the ‘Restoring’ state, the only way I found to bring it online is to create an empty database with the exact same file structure and then swap its files for the damaged ones.

CREATE DATABASE [ci_dbUp_R]
ON PRIMARY ( NAME = N'ci_dbUp', FILENAME = N'I:\MSSQL13.MSSQLSERVER\ci_dbUp_R.mdf', SIZE = 32768KB, FILEGROWTH = 10% )
LOG ON ( NAME = N'ci_dbUp_log', FILENAME = N'J:\MSSQL13.MSSQLSERVER\ci_dbUp_R_log.ldf', SIZE = 16384KB, FILEGROWTH = 10% )

Now, replace the files with the ones I’ve saved before:

ALTER DATABASE ci_dbUp_R SET OFFLINE WITH ROLLBACK IMMEDIATE
-- ...copy files...
ALTER DATABASE ci_dbUp_R SET ONLINE

Time for surgery! Let’s enable emergency mode for our database:

ALTER DATABASE ci_dbUp_R SET EMERGENCY

Now it looks like this:

Confirming that the database is still in an inconsistent state:

DBCC CHECKDB ('ci_dbUp_R') WITH NO_INFOMSGS, ALL_ERRORMSGS, EXTENDED_LOGICAL_CHECKS

… and it returned no errors! Let’s try to bring it back online:

ALTER DATABASE ci_dbUp_R SET ONLINE

Command(s) completed successfully.

Awesome! We didn’t even need to run a repair – re-attaching the files under a different name has completely resolved the issue.
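Had CHECKDB reported errors in emergency mode, the repair step would have looked roughly like the sketch below. I did not need to run it here, so treat it as an outline only; REPAIR_ALLOW_DATA_LOSS can discard data and should be a last resort after the file copies are safely stored.

```sql
-- Emergency mode: read-only, sysadmin-only access, recovery skipped.
ALTER DATABASE ci_dbUp_R SET EMERGENCY;

-- Repair options require single-user mode.
ALTER DATABASE ci_dbUp_R SET SINGLE_USER WITH ROLLBACK IMMEDIATE;

-- Attempt the repair; in emergency mode this also rebuilds the log if needed.
DBCC CHECKDB (N'ci_dbUp_R', REPAIR_ALLOW_DATA_LOSS) WITH NO_INFOMSGS, ALL_ERRORMSGS;

-- Let other connections back in once the repair has finished.
ALTER DATABASE ci_dbUp_R SET MULTI_USER;
```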

Database recovery is always fun, as long as it’s not the production one. Stay tuned!